GUIding ML Buy vs. Build

Challenge: Determine the user criteria to guide the build vs. buy decision for a machine learning Fine-Tuning product.

My Role: As a Senior Service Designer, I joined the team after the initial research phase to define critical user criteria and tool considerations.

Team Structure: Senior Service Designer (me), Product Designer, Product Team, ML Engineers

Impact: Established the user criteria to inform leadership's buy vs. build decision.

Summary: After completing an extensive ML research effort, our internal tooling product team came to us for help determining the tooling strategy for one critical moment in the ML process. Our scope focused on the Fine-Tuning stage. Although an internal tool for Fine-Tuning already existed, the product team had seen adoption and scalability issues arise across other potential use cases. Fine-Tuning needs at Spotify were still few, but with ML needs growing, they were likely to scale fast, and the team needed to be well positioned for rapid adoption.

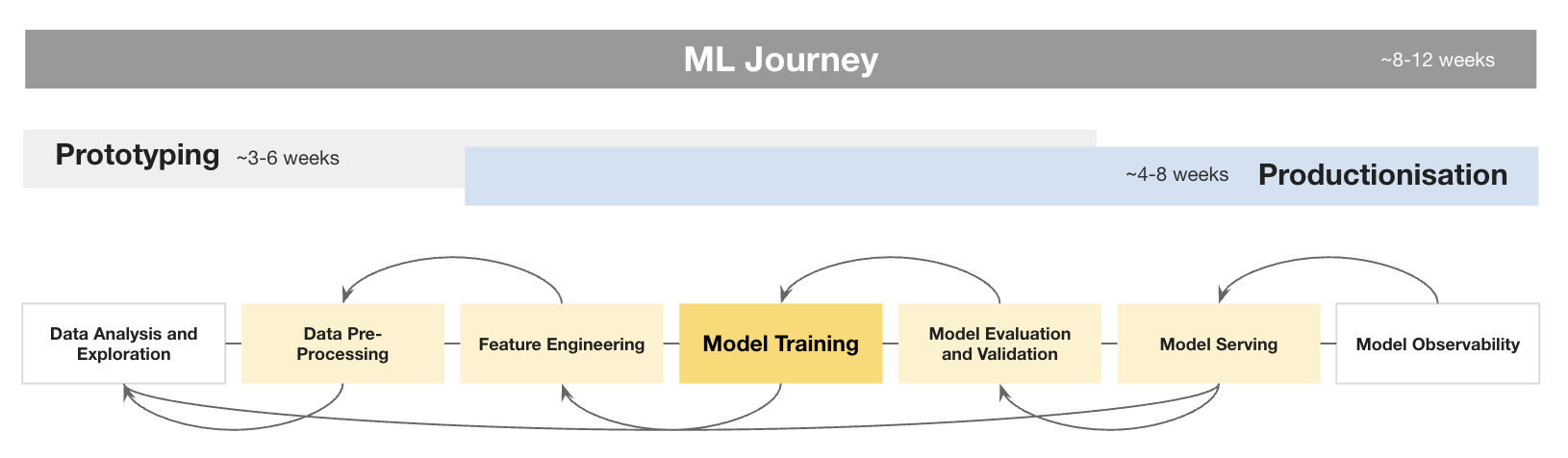

The Machine Learning process

A series of research efforts mapped the high-level ML journey. Fine-Tuning falls under the Model Training stage. Fine-tuning a machine learning model means taking a pre-trained model and adjusting its parameters using a smaller, task-specific dataset. This lets the model adapt to the nuances of the new data, improving its performance and accuracy on specific tasks without training from scratch. Because fine-tuning builds on what the model has already learned, it shortens training time and often improves performance, especially when labeled data is limited. It is a key step in creating efficient, effective machine learning solutions tailored to specific applications.

The user findings

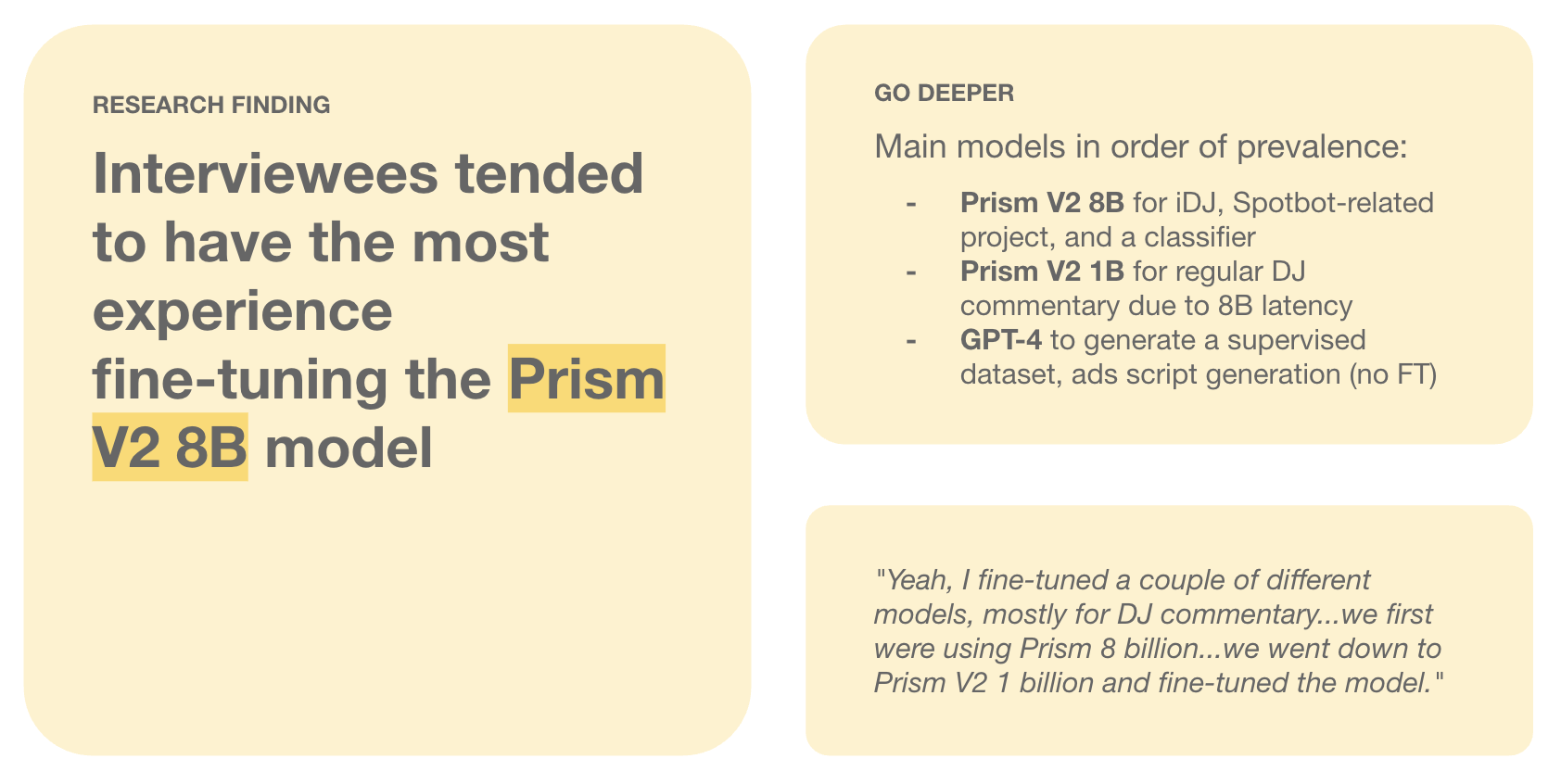

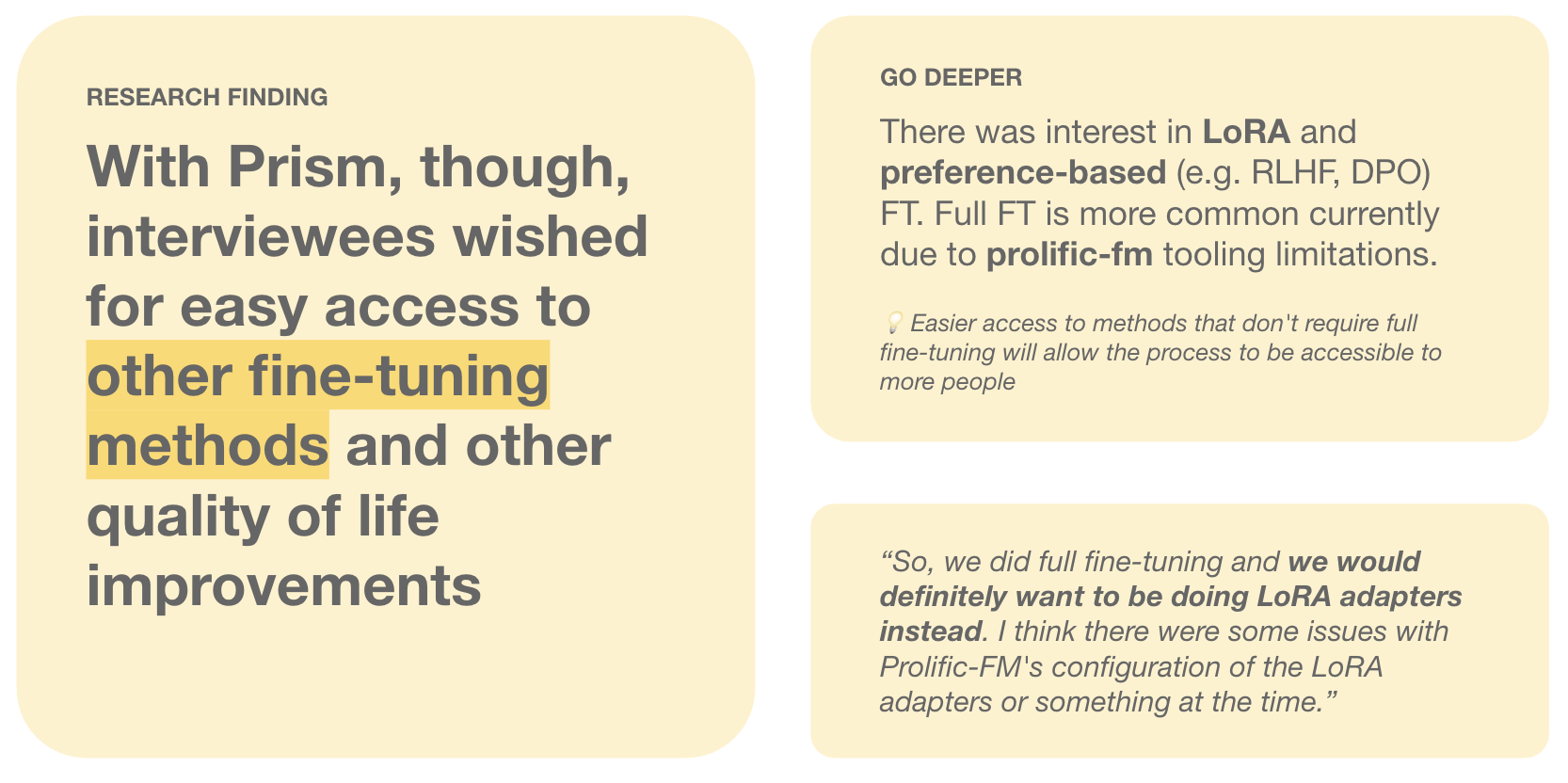

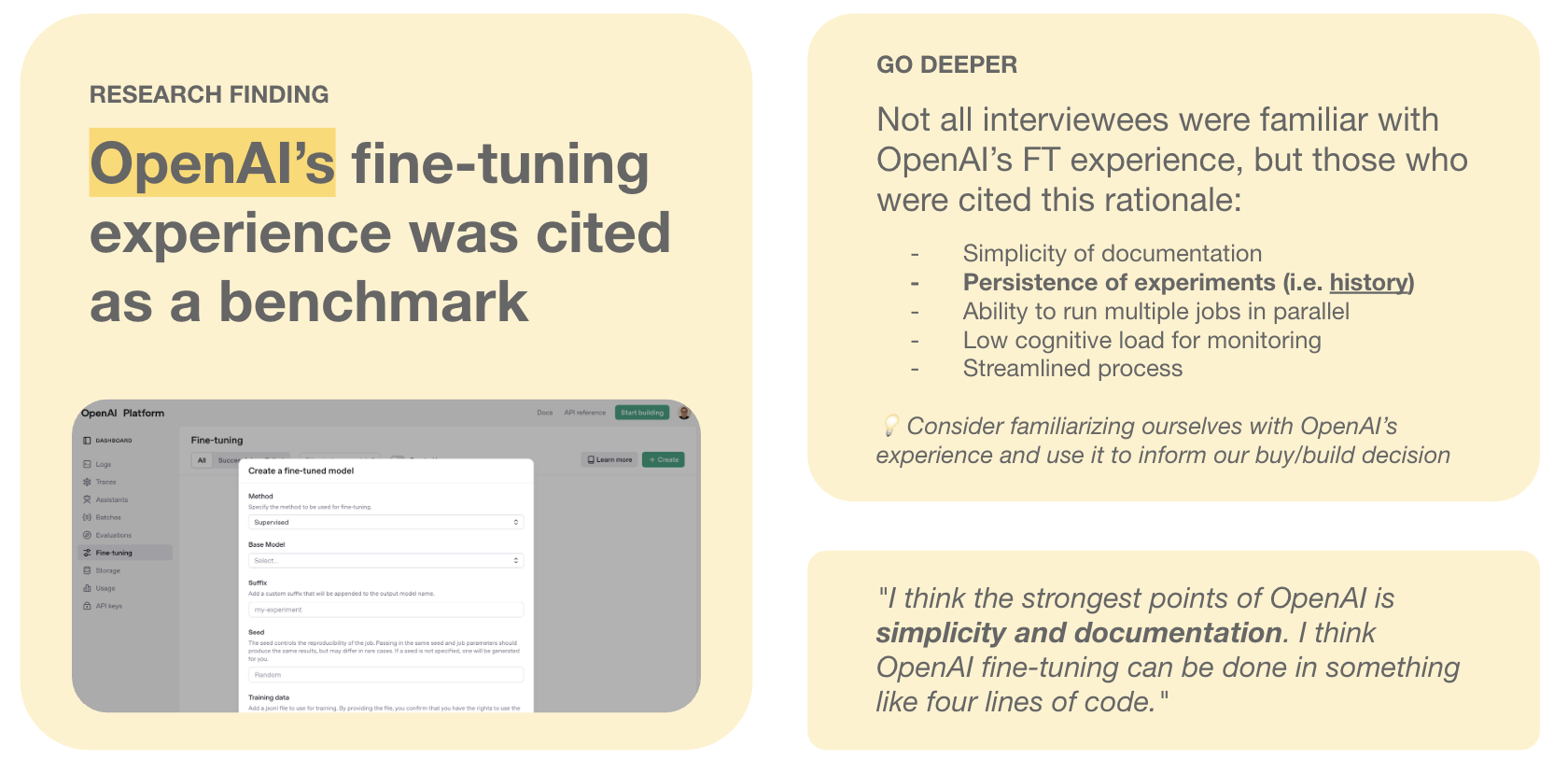

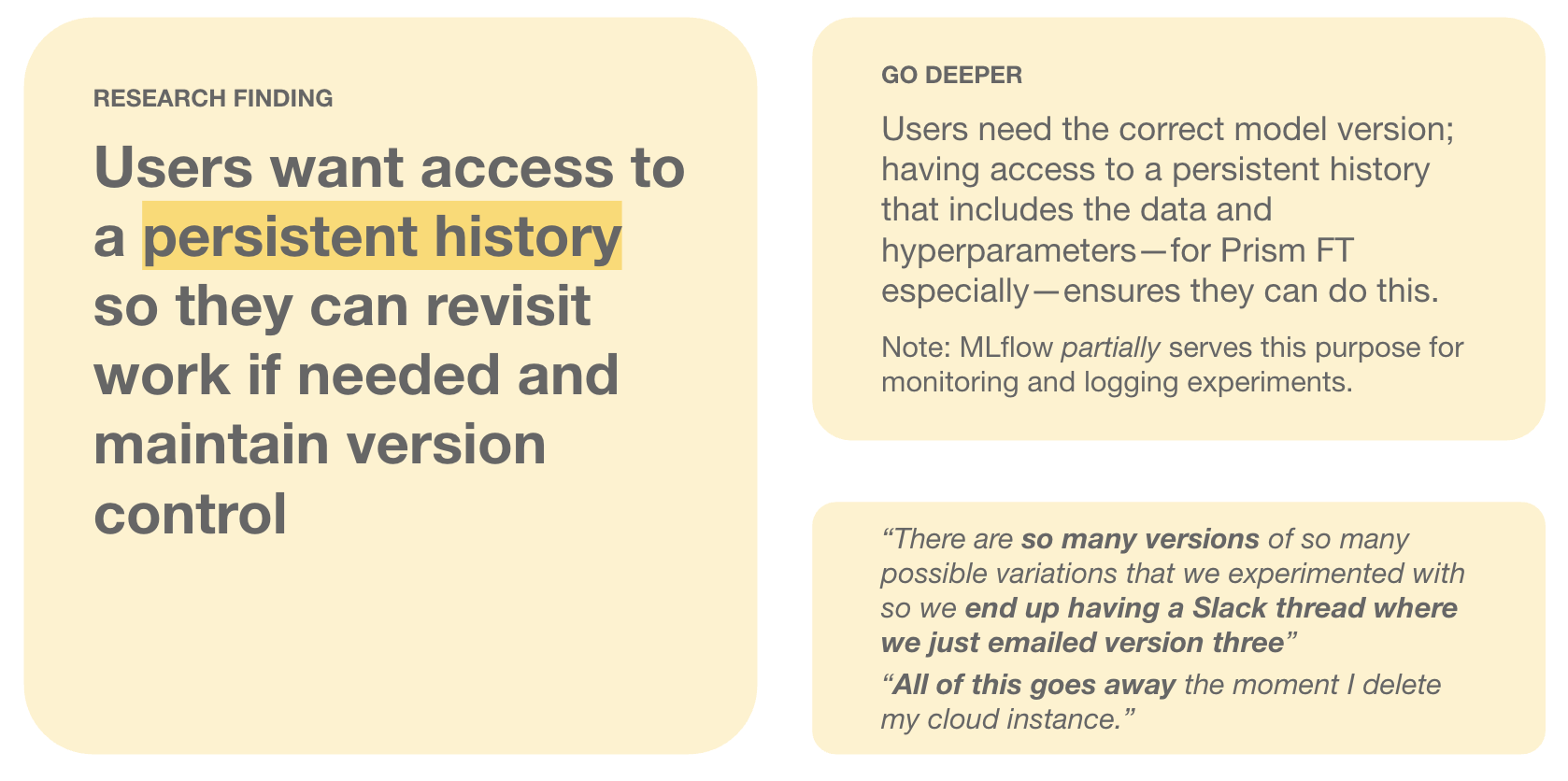

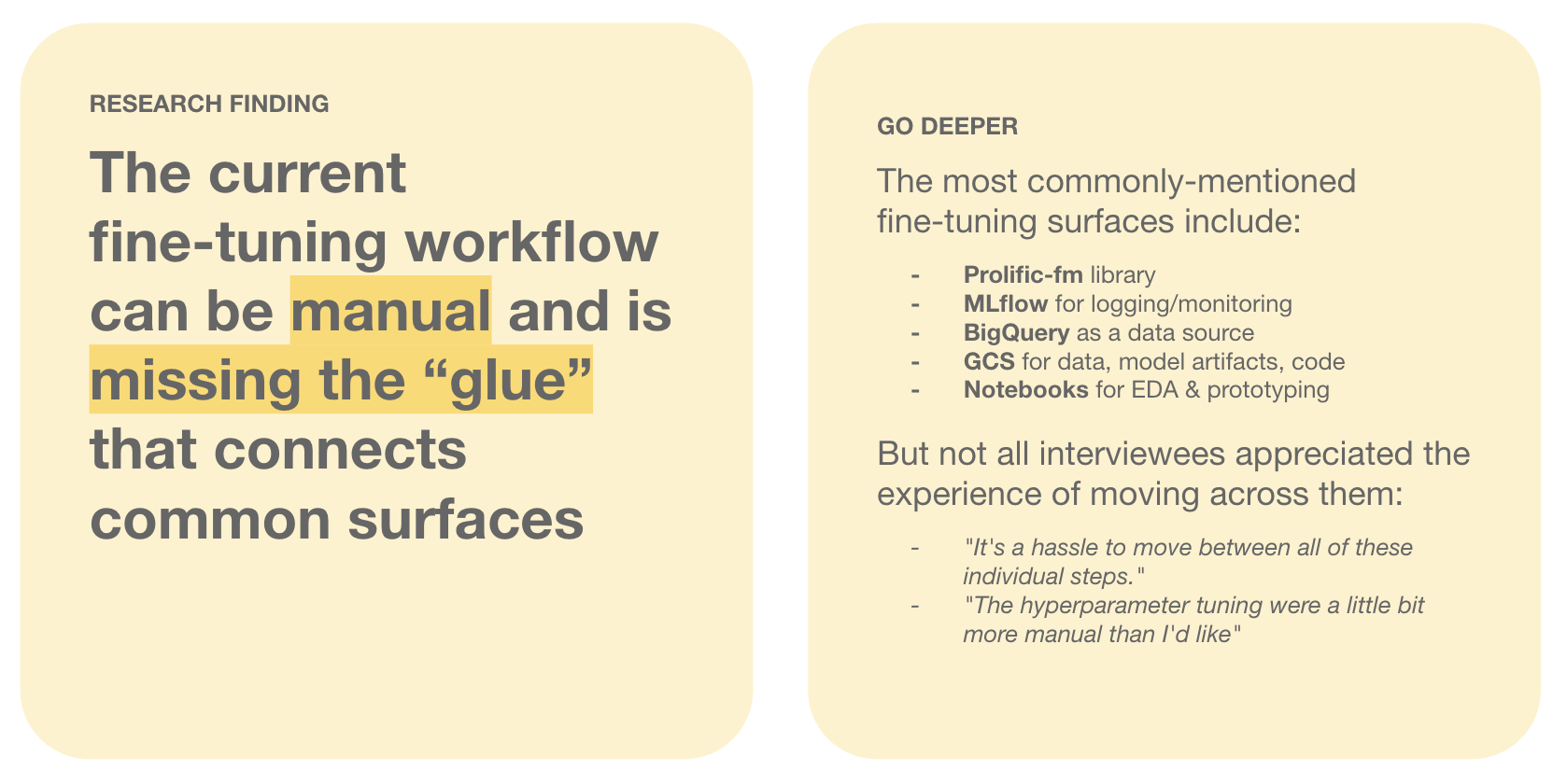

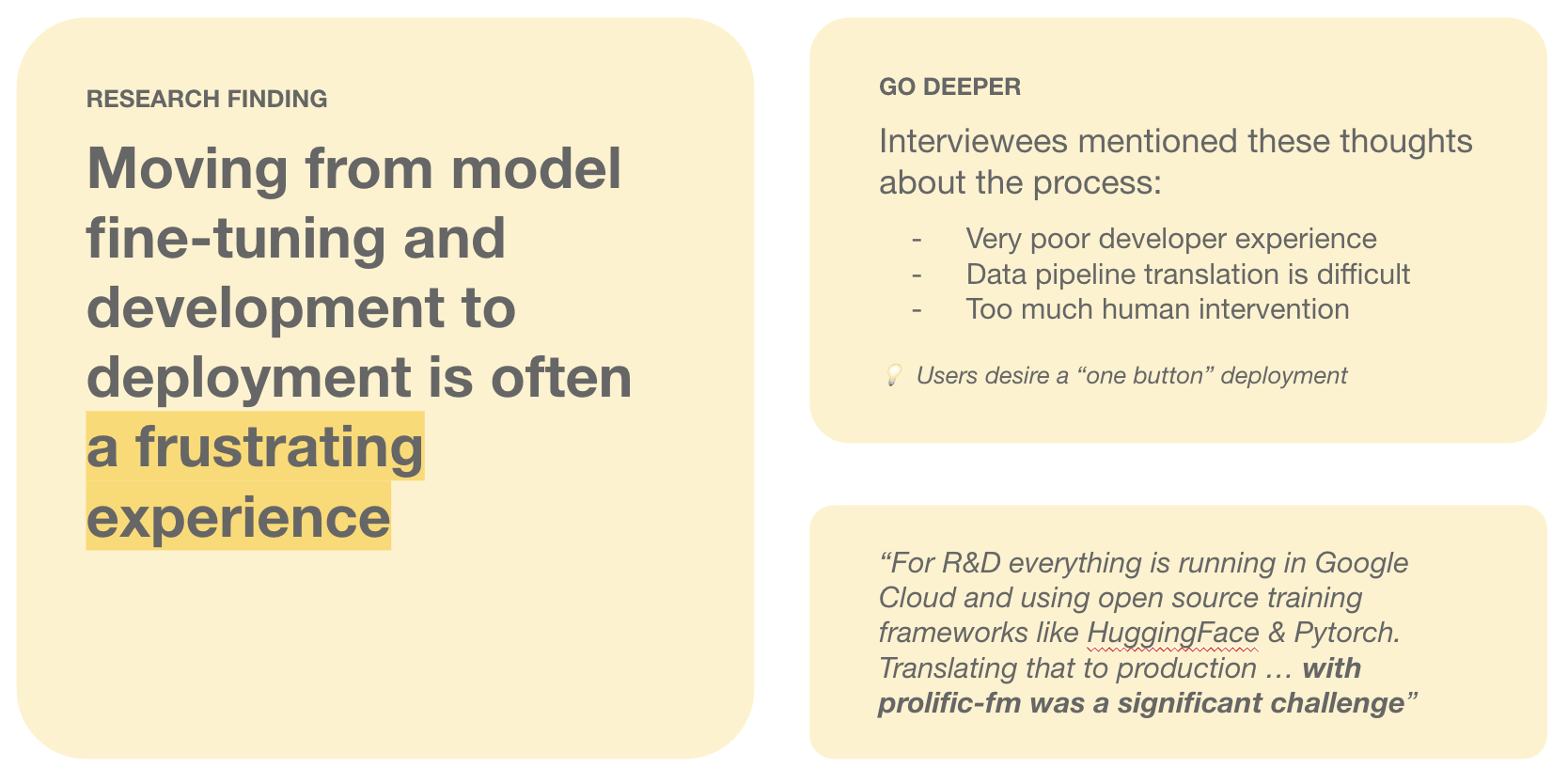

We ran 10 user interviews to assess current Fine-Tuning efforts and needs, as well as potential future needs and adoption. The interviews produced six strong findings that built on each other and drove our final criteria. We initially assumed that everyone Fine-Tuning at Spotify was using the same model by choice, but the research showed this was due only to its availability, not its usability. Instead, users naturally referenced OpenAI's Fine-Tuning experience as a benchmark for usability, primarily for the ease and accessibility of its UI but also for its version history. Version history was essential because it let users adjust the model freely without lasting consequences. Users also cited wanting a variety of fine-tuning models, given how new the tools are and how each one has different limitations.

Finally, we found that beyond assessing any tool on its own merits, we also needed to consider how well it would work within the broader ecosystem. A major pain point users raised was the manual work caused by moving between products, so we needed something that could connect common surfaces.

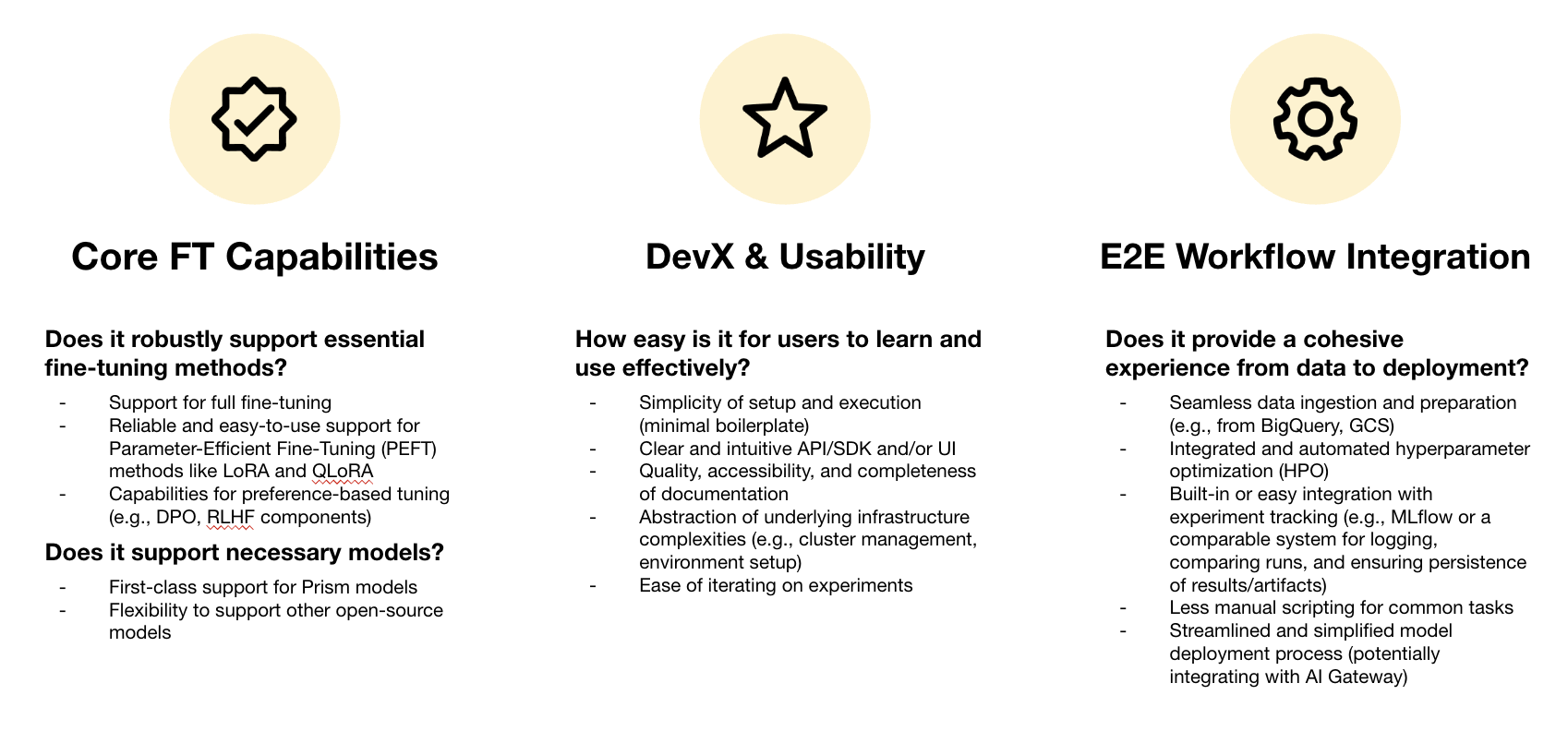

The user criteria

We synthesized the research into a list of foundational needs and a list of potential "deal sweeteners" for added consideration. Critical needs included a tool offering a variety of methods, not all of which require full Fine-Tuning; a seamless, OpenAI-like experience and UI; access to version control; and an integrated end-to-end flow. Many of the "deal sweeteners" focused on UI features. For instance, many users asked for one-click Fine-Tuning jobs to reduce both workload and cognitive load. Users also hoped for AI enhancements that could guide them through suggested actions and support evaluation. Below are the final criteria we delivered to the leadership team to inform the buy vs. build decision.