Designing a Spotify AI-Powered Authentication Experience

Challenge: Create a net new passwordless authentication experience using machine learning voice recognition to enhance security while increasing user ease for Spotify app login.

My Role: As the Sr UX Designer, I designed the end-to-end authentication system architecture, established the interaction patterns and design principles for AI-powered authentication, partnered with data science on model requirements, and created the scalable framework that would support voice authentication across Spotify's expanding product surfaces.

Team Structure: Sr UX Designer (me), Data Scientist, Engineers

Impact: Designed authentication system achieving 90% model accuracy and 3x faster login—establishing the foundational patterns to scale to CarPlay, wearables, and shared device experiences. Currently launching.

Summary: As Spotify expanded across devices and surfaces, traditional authentication created friction and inconsistency. I designed the foundational AI-powered authentication system that would work coherently across mobile, CarPlay, wearables, and future products—championing voice recognition as the scalable primitive that could unify login experiences while enhancing security. The solution was prototyped in one week and tested with employees from diverse geographic backgrounds to ensure accent compatibility and model confidence. The successful prototype demonstrated both enhanced security and improved user experience, leading to executive approval for Q4 launch.

How might we (HMW) balance friction and ease to establish secure entry points that don’t burden users?

THE PROCESS

This wasn't just about designing a feature; it was about establishing the foundational patterns for how Spotify would handle AI-powered authentication across every surface. I needed to design a system that could scale from mobile to automotive to wearables, each with different constraints and use cases. I started by auditing the current login journey and found that the average user took 45 seconds to complete authentication once password retrieval and typing errors were included, and that 70% of Spotify users had experienced password issues within the last month. I then researched voice biometric capabilities and deepfake detection methods and discovered that combining vocal pattern analysis with liveness detection could create a highly secure authentication method. The key insight was that each person's voice contains unique characteristics that are nearly impossible to replicate, even with sophisticated deepfake technology.

I mapped out user scenarios focusing on the consumer app login experience: first-time app installation, daily recurring logins, login after app updates, account recovery, and shared device situations.

Working with a data scientist on a rapid survey, I found that 92% of users were comfortable using voice assistants and were already familiar with voice interaction from recent experiences in other industries. We also found that users naturally associated voice recognition with Spotify’s audio capabilities and saw it as a natural expansion of the product's features. This validated my hypothesis that voice could be a natural authentication method. I quickly built a prototype to bring clarity to the vision and gain buy-in from engineering to build a model that would support the experience. I recruited Spotify employees representing our global user base to test the model; participants from North America, Europe, Asia, and Latin America ensured accent diversity. Users recorded two voice samples during testing:

A personalized phrase they choose (e.g., "My music is playing")

A randomized phrase provided by the system for additional security
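The pairing of a user-chosen phrase with a system-generated one can be sketched as follows. This is a minimal illustration, not the prototype's implementation: the word list, the four-word phrase length, and the names `EnrollmentPlan`, `random_challenge_phrase`, and `build_enrollment` are all assumptions made for the example. The idea it shows is that a phrase the user cannot know in advance is harder to satisfy with a pre-recorded clip.

```python
import secrets
from dataclasses import dataclass

# Hypothetical word list; a real system would draw from a much larger vocabulary.
WORDS = ["river", "amber", "violet", "seven", "garden", "piano", "silver", "window"]


@dataclass
class EnrollmentPlan:
    personal_phrase: str   # phrase the user chose, e.g. "My music is playing"
    challenge_phrase: str  # randomized phrase supplied by the system


def random_challenge_phrase(word_count: int = 4) -> str:
    """Build an unpredictable phrase using a cryptographically secure RNG."""
    return " ".join(secrets.choice(WORDS) for _ in range(word_count))


def build_enrollment(personal_phrase: str) -> EnrollmentPlan:
    """Pair the user's own phrase with a freshly generated challenge phrase."""
    phrase = personal_phrase.strip()
    if not phrase:
        raise ValueError("personal phrase must not be empty")
    return EnrollmentPlan(phrase, random_challenge_phrase())
```

Calling `build_enrollment("My music is playing")` would return the two prompts shown to the user during enrollment, with a different challenge phrase on each call.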

Through rapid iteration, we improved the model so it could identify each user immediately by voice, even with background noise, and detect deepfakes with a 90% accuracy rate. Our engineers created synthetic voice samples using advanced AI tools, and the system identified all attempts. This was crucial for leadership buy-in.

DESIGNING SCALABLE AUTHENTICATION PRIMITIVES

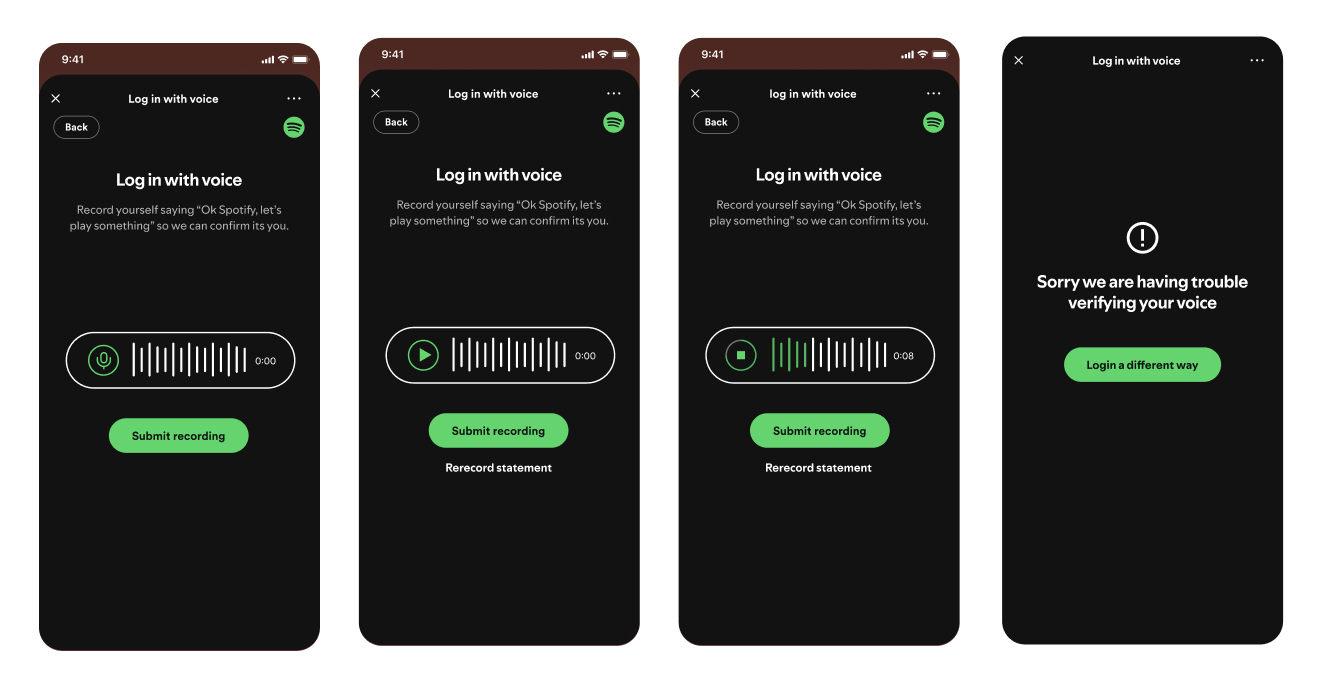

As we tested the initial prototype and model, I built a more robust, higher-fidelity prototype that streamlined the enrollment and authentication experiences. The core principle was "speak naturally, login naturally."

The interface guides users through optimal recording conditions with real-time feedback on audio quality. Net new components like visual waveforms respond to their voice, creating an engaging experience that doesn't feel like a security process. Each recording takes approximately 5 seconds, with the entire enrollment taking under 30 seconds.
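One way the real-time audio quality feedback could be driven is a simple level check on each incoming audio frame. The sketch below is an assumption for illustration only: the thresholds `QUIET_RMS` and `CLIP_PEAK`, the function name `audio_feedback`, and the normalized sample range of -1.0 to 1.0 are hypothetical and do not come from the prototype.

```python
import math
from typing import Sequence

QUIET_RMS = 0.02  # hypothetical: below this average level the voice is too faint
CLIP_PEAK = 0.99  # hypothetical: peaks at or above this suggest clipping


def audio_feedback(samples: Sequence[float]) -> str:
    """Classify one frame of normalized samples (-1.0 to 1.0) for UI guidance."""
    if not samples:
        return "no_input"
    peak = max(abs(s) for s in samples)
    # Root mean square approximates the perceived loudness of the frame.
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    if peak >= CLIP_PEAK:
        return "too_loud"
    if rms < QUIET_RMS:
        return "too_quiet"
    return "ok"
```

The returned label could then select the guidance message and the waveform styling shown to the user.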

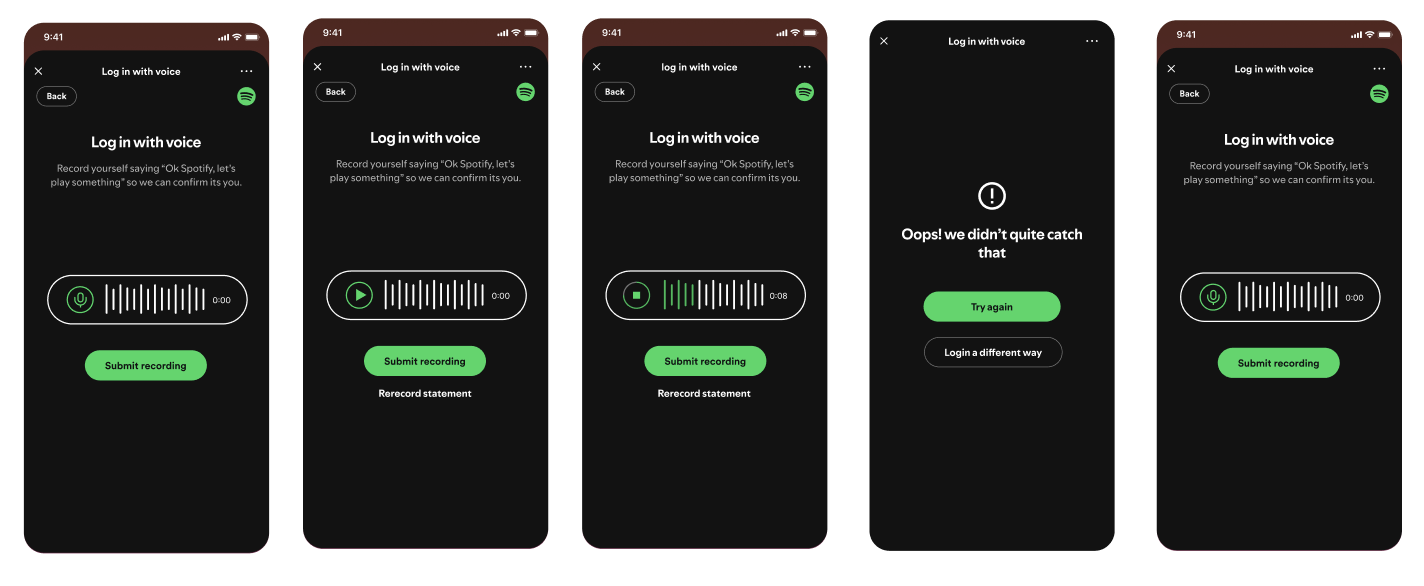

I designed fallback mechanisms as first-class system states, not edge cases—establishing the pattern that AI-powered features need transparent failure modes. This principle became part of Spotify's broader approach to AI-powered experiences:

Try Again State: When background noise interferes, the interface provides contextual guidance and prompts the user to try again.

Failure State: After two unsuccessful attempts, or when model confidence is low, the interface provides a graceful transition to email/password login.
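The two fallback states above can be read as a small decision function. The following is a sketch under stated assumptions rather than the shipped logic: the two-attempt limit comes from the description above, while the confidence thresholds, the 0.0 to 1.0 confidence scale, and the names `AuthState`, `AttemptResult`, and `next_state` are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class AuthState(Enum):
    AUTHENTICATED = "authenticated"
    TRY_AGAIN = "try_again"
    PASSWORD_FALLBACK = "password_fallback"


MAX_ATTEMPTS = 2        # from the text: fall back after two unsuccessful attempts
MATCH_CONFIDENCE = 0.9  # hypothetical threshold for accepting a voice match
MIN_CONFIDENCE = 0.5    # hypothetical threshold for "low model confidence"


@dataclass
class AttemptResult:
    confidence: float  # model's match confidence, assumed to range from 0.0 to 1.0
    noisy: bool        # whether background noise was detected during recording


def next_state(result: AttemptResult, failed_attempts: int) -> AuthState:
    """Pick the next UI state; failed_attempts counts failures before this one."""
    if result.confidence >= MATCH_CONFIDENCE:
        return AuthState.AUTHENTICATED
    # Low confidence that noise does not explain goes straight to the fallback.
    if result.confidence < MIN_CONFIDENCE and not result.noisy:
        return AuthState.PASSWORD_FALLBACK
    # This attempt failed; fall back once the attempt limit is reached.
    if failed_attempts + 1 >= MAX_ATTEMPTS:
        return AuthState.PASSWORD_FALLBACK
    return AuthState.TRY_AGAIN
```

Treating the fallback as an ordinary return value, rather than an exception path, mirrors the design choice of making failure a first-class state.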

Since Spotify already had "Hey Spotify" voice search, I ensured our authentication system complemented this existing feature. Users who regularly use voice search experience a unified voice interaction model - the same voice that searches for music also securely logs them in.

FOUNDATIONS FOR SCALE

This project established the foundational patterns for AI-powered experiences at Spotify. The authentication system I designed became organizational infrastructure—the voice interaction model, the failure states, the cross-product scalability approach all became primitives that other teams now build on. Beyond the authentication MVP, I designed the framework for scaling these patterns to CarPlay and wearables, ensuring coherence as the system expands. In demonstrating this scalability for CarPlay (hands-free, driving context) and wearables (quick access, limited screen real estate), I proved that the foundational system could adapt to radically different constraints while maintaining coherent interaction patterns. These extensions are roadmapped for the next planning cycle.

The prototype sprint proved that voice recognition could be both more secure and more convenient than traditional passwords. By focusing on natural interaction patterns and leveraging existing voice features within Spotify, I created an authentication method that feels like a natural evolution of the product. The successful deepfake detection was particularly validating, as it addressed the primary security concern stakeholders had about voice authentication. The positive employee response and quantifiable improvements in both security (90% accuracy) and satisfaction metrics (3x faster login) made a compelling case for investment. Moving forward, this feature positions Spotify at the forefront of authentication innovation, potentially eliminating one of the most common user frustrations while actually increasing account security.